Dual Track Agile: The Experience So Far

Last September my team and I decided to test a new methodology called Dual Track Agile for the development of our product (a simple mobile game called Game Arena). The main principles of the methodology are listed in a post I published on October 7th. After four months, I thought it would be worth telling you about the experience: how we applied it, what worked, and what didn’t work for us.

Premise

The scenario was not ideal, because we were trying to adopt this technique for mobile application development. One of the principles of Dual Track is to release features often, possibly daily, if not twice a day. With native apps this is not possible, because some “technical” time is required to package the app and publish it on the stores. Plus, in the case of iOS, you need to wait for approval. Finally, store policies don’t allow you to release apps with partially developed elements, so releasing “fake” features to measure user interest is simply not an option.

We were aware of those limitations, but we also knew there was room for improvement compared to our previous approach. We decided to be as fast as the technology would allow.

Daily routine

Dual Track Agile is based on the principle that every day the team has to work on two tracks: discovery and delivery. In short, discovery is searching for solutions and delivery is implementing them. We knew that, but we didn’t know how much time we were going to allocate to each activity. The only reasonable approach seemed to be deciding day by day.

We decided to keep our daily appointment, the so-called standup meeting, but turned it into something different: a daily meeting that could last just 10 minutes if everybody knew what to do, or much longer if we needed to discuss which stories to tackle. We used this time to discuss technical solutions too. Since there was no longer a “sprint planning” meeting, it was like splitting the planning into several pieces, each one addressed exactly when the team needed it.

The tools: setting up the board

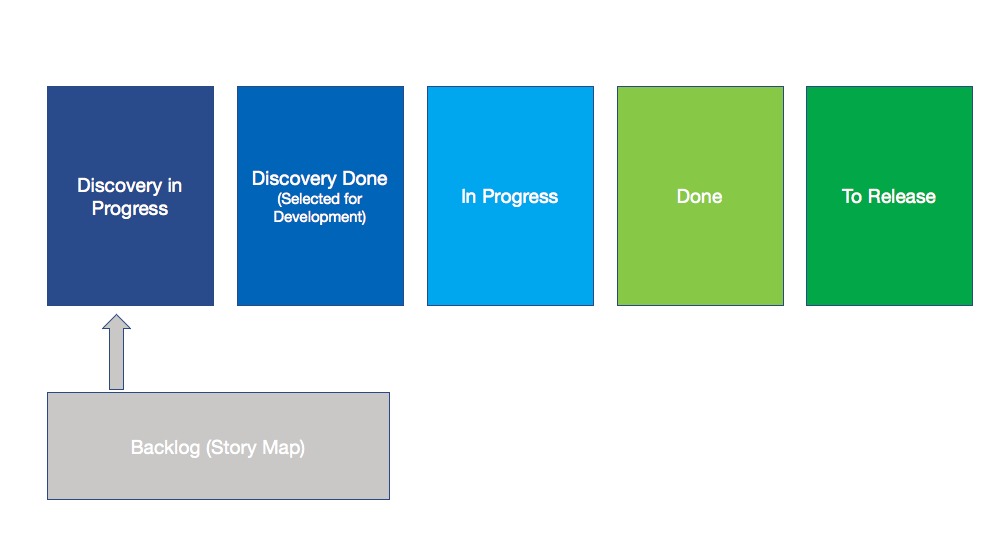

Since we were already using Jira, moving to a Jira Kanban board was a natural choice, but with a few adjustments. The default workflow for a Kanban board is: Backlog, Selected for Development, In Progress, and Done. Normally it’s exactly what you need, but in our case there were two problems: first, there is no information about tasks currently in discovery; second, there is no difference between Done and Released, which for us was important.

So we decided to go for the following approach:

1. Use the Backlog to store all the possible user stories, including the ones that are not validated yet (we call it the Story Map). Since they are not validated, they are not visible on the Kanban board. You can only see them in the Backlog view.

2. From the Backlog, we pull the stories for which we want to start a discovery. Discovery in Progress is the first column on our Kanban board. Bugs that we need to fix, but don’t yet know how, are also added to this column. Stories stay there as long as needed for analysis, prototypes, and tests.

3. Once a story has been validated, we move it to the next column, Discovery Done. This means the story is ready to be developed. At this stage we add an estimate in hours.

4. When someone starts working on the story, it moves to In Progress. During this stage, we log the time spent on it every day. When it’s completed, it moves to Done.

5. The fifth column is To Release. This means the feature has been packaged into the app and the app has been submitted to Google Play/iTunes Store (so it is not live yet).

For back-end activities we use the same schema, the only difference being that a released back-end change is actually live, since there is no store approval to wait for.
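To make the flow concrete, here is a minimal Python sketch of the columns as a forward-only state machine. It only illustrates the rules listed above and is not how Jira stores workflows; the `Column` enum, the `advance` helper and the forward-only restriction are assumptions of this sketch (on a real board you can also move cards back).

```python
from enum import Enum


class Column(Enum):
    """Statuses a story moves through, mirroring the board described above."""
    STORY_MAP = "Story Map (Backlog)"          # not validated, hidden from the board
    DISCOVERY_IN_PROGRESS = "Discovery in Progress"
    DISCOVERY_DONE = "Discovery Done"          # validated and estimated in hours
    IN_PROGRESS = "In Progress"                # time logged every day
    DONE = "Done"
    TO_RELEASE = "To Release"                  # submitted to the stores, not live yet
    IN_STORE = "In Store"                      # live; no longer shown on the board


# Hypothetical forward-only transitions between columns.
NEXT = {
    Column.STORY_MAP: Column.DISCOVERY_IN_PROGRESS,
    Column.DISCOVERY_IN_PROGRESS: Column.DISCOVERY_DONE,
    Column.DISCOVERY_DONE: Column.IN_PROGRESS,
    Column.IN_PROGRESS: Column.DONE,
    Column.DONE: Column.TO_RELEASE,
    Column.TO_RELEASE: Column.IN_STORE,
}

# Only validated, not-yet-live stories appear on the Kanban board.
VISIBLE_ON_BOARD = set(Column) - {Column.STORY_MAP, Column.IN_STORE}


def advance(current: Column) -> Column:
    """Move a story one column forward; raise if it is already live."""
    if current not in NEXT:
        raise ValueError(f"{current.value} is a final status")
    return NEXT[current]


if __name__ == "__main__":
    status = Column.STORY_MAP
    while status is not Column.IN_STORE:
        status = advance(status)
        where = "on the board" if status in VISIBLE_ON_BOARD else "hidden"
        print(f"{status.value}: {where}")
```

Bugs whose fix is still unknown would simply enter at `DISCOVERY_IN_PROGRESS` instead of starting from the Story Map.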

Of course, this is strictly personal: what worked for us may not work for you. If you have suggestions, feel free to comment.

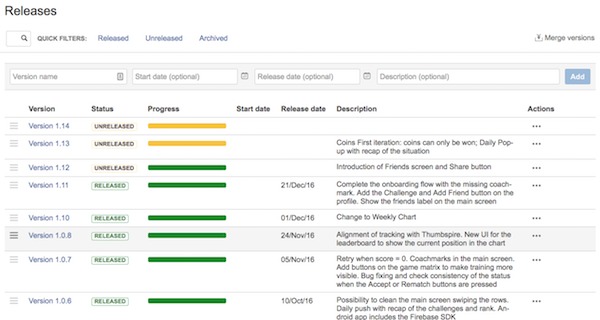

The tools: releases and workflow

We soon discovered that using Jira Releases was very useful. Each time a story is ready for development, we assign it a release version. Since we don’t want to plan too far ahead, this helps us visualise when we want the new feature to go live. For example, if we are working on v1.05, we may decide that a feature will go live in v1.05 or in the next version (v1.06), rarely two versions from now (v1.07), but never further than that. Either the story is urgent and useful for improving our KPIs, or we just remove it from the board.
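The planning window can be written down as a tiny rule. This sketch assumes tags of the form `vMAJOR.MINOR` with a two-digit minor, as in the examples above, and that each release bumps only the minor number; `parse_version`, `allowed_targets` and `assign_release` are hypothetical helpers, not part of Jira.

```python
def parse_version(tag: str) -> tuple[int, int]:
    """Split a tag like 'v1.05' into (major, minor); assumes the vMAJOR.MINOR format."""
    major, minor = tag.lstrip("v").split(".")
    return int(major), int(minor)


def format_version(major: int, minor: int) -> str:
    """Rebuild a tag, zero-padding the minor number to two digits."""
    return f"v{major}.{minor:02d}"


def allowed_targets(current: str, max_ahead: int = 2) -> list[str]:
    """Versions a ready story may be assigned to: the current one up to max_ahead later."""
    major, minor = parse_version(current)
    return [format_version(major, minor + k) for k in range(max_ahead + 1)]


def assign_release(story: str, target: str, current: str) -> str:
    """Accept the target version only inside the planning window."""
    if target not in allowed_targets(current):
        # Too far in the future: per the rule above, the story leaves the board.
        raise ValueError(f"{story}: {target} is too far ahead of {current}")
    return target
```

With `current="v1.05"`, `allowed_targets` returns `['v1.05', 'v1.06', 'v1.07']`, and any later target raises, mirroring the choice between keeping a story close or dropping it.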

When all the stories of the current version (e.g. v1.12) are Done, we package the app and submit it to iTunes and Google Play, and the stories marked v1.12 move to the last column (To Release). Once the app is approved and goes live, we use the Jira Releases view to release the version. This action changes the stories to In Store status, which makes them disappear from the Kanban board. Doing this at the right time lets us keep a history of all the versions of the app, together with each release date and exactly which features went live. This is fundamental when we need to compare the KPIs of different versions.
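The bookkeeping behind this step can be sketched as follows. It is a hypothetical in-memory model that mimics the statuses described above; it does not call Jira, and `Story`, `ReleaseLog` and the status strings are illustrative names only.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Story:
    key: str            # hypothetical issue key, e.g. "GA-42"
    version: str        # release version assigned when the story was ready, e.g. "v1.12"
    status: str = "Done"


@dataclass
class ReleaseLog:
    # version -> (go-live date, keys of the stories that shipped in it)
    history: dict[str, tuple[date, list[str]]] = field(default_factory=dict)

    def submit(self, stories: list[Story], version: str) -> None:
        """App submitted to the stores: every story of this version moves to To Release."""
        pending = [s for s in stories if s.version == version]
        if any(s.status != "Done" for s in pending):
            raise ValueError(f"not all stories of {version} are Done")
        for s in pending:
            s.status = "To Release"

    def release(self, stories: list[Story], version: str, live_on: date) -> None:
        """App approved and live: mark the stories In Store and record what shipped."""
        shipped = [s for s in stories if s.version == version and s.status == "To Release"]
        for s in shipped:
            s.status = "In Store"
        self.history[version] = (live_on, [s.key for s in shipped])
```

Recording the go-live date next to the shipped story keys is what makes a later per-version KPI comparison possible.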

The first test and the definition of the OKRs

One of the principles of Dual Track is using OKRs to guide development. Each product manager should set the objectives and key results of their product for the next quarter.

Since our product was new, we didn’t have any data at the beginning. So the first thing to do was to run an acquisition campaign to gather stats. To measure conversion rate and retention properly, we wanted to get around 1000 installs in no more than 3–4 days. This was much more difficult than expected (in fact, we had time to release two more versions while waiting for the campaign), but in the end we did it.

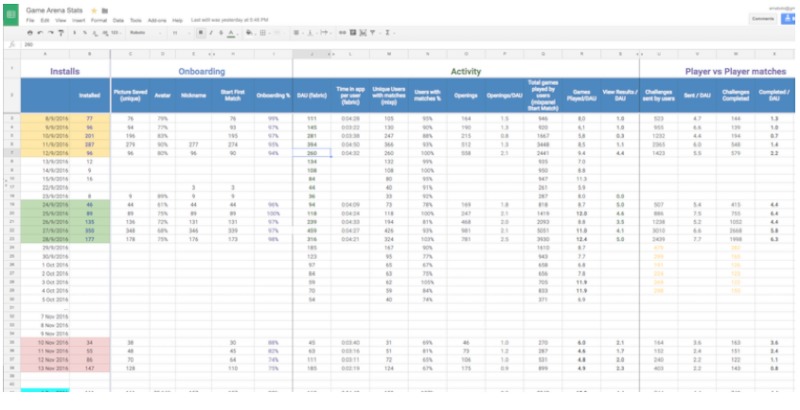

We decided to focus on two main key results: conversion rate (players completing registration and profile), to see how well the UX was designed, and retention, which is the main parameter for measuring the “happiness” of the players. We also measured a lot of other data, for example the percentage of challenges accepted by other players.
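For readers who want to reproduce the two key results, here is one way to compute them. The exact definitions we used are not spelled out in this post, so this sketch assumes the common ones: conversion rate as completed profiles divided by installs, and Day-N retention as the share of players who were active exactly N days after installing. The function names and data structures are hypothetical.

```python
from datetime import date, timedelta


def conversion_rate(installs: int, completed_profiles: int) -> float:
    """Share of installs that completed registration and profile."""
    return completed_profiles / installs if installs else 0.0


def day_n_retention(install_day: dict[str, date],
                    active_days: dict[str, set[date]],
                    n: int) -> float:
    """Classic Day-N retention: share of players active exactly n days after installing.

    install_day maps a player id to the install date; active_days maps a
    player id to the set of dates on which that player opened the app.
    """
    if not install_day:
        return 0.0
    returned = sum(
        1
        for player, day0 in install_day.items()
        if day0 + timedelta(days=n) in active_days.get(player, set())
    )
    return returned / len(install_day)
```

Some teams use a “rolling” variant (active on day N or later); whichever definition you pick, keep it fixed across campaigns so the numbers stay comparable.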

Improving the metrics

We analysed the numbers with the team and decided what was good and what wasn’t. A conversion rate of 95% was undoubtedly good, while having 75% of sent challenges go unanswered by the other player was definitely not. And a Day-1 retention of 20% was not good enough.

This is where Dual Track Agile could really start, because we asked ourselves: what can we do to improve these metrics? From then on, every feature we developed was based on this question. The only exceptions were bug fixes and technical debt.

We created a spreadsheet with all the data we could collect from every source of information we had. During campaigns, data were analysed daily, with a final assessment a few days after each campaign ended.

What’s really good about this approach is that whatever answer we give to a problem, we eventually find out whether it was right or wrong. Every time you release something, you have to measure the outcome, and the only way to be successful is to do all of this continuously, quickly, and smoothly.

As Gabe Newell recently said during an interview:

It’s the iteration of hypothesis, changes, and measurement that will make you better at a faster rate than anything else we have seen.

The first iterations and the following ones

The first iteration was focused on improving the percentage of accepted matches. A simple change to the algorithm proved extremely useful: we immediately increased this number to 75%.

Over the next releases we were able to improve many numbers: accepted matches rose to 87%; the number of games played per player per day went from 8 to 14; and the average time spent in the app increased from 4 to 5 minutes.
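When comparing versions, it helps to be explicit about what “improved” means. This sketch computes the relative change for the figures quoted above; `relative_change` is a hypothetical helper name. For rates such as accepted matches, the percentage-point difference (87 - 75 = 12 points) and the relative change (+16%) are different numbers, so it is worth stating which one you report.

```python
def relative_change(before: float, after: float) -> float:
    """Relative change between two versions' KPI values, e.g. 0.75 for +75%."""
    if before == 0:
        raise ValueError("baseline is zero; relative change is undefined")
    return (after - before) / before


# KPI values quoted in the text: (earlier value, later value)
kpis = {
    "games per player per day": (8, 14),
    "average minutes in app": (4, 5),
    "accepted matches (%)": (75, 87),
}

for name, (before, after) in kpis.items():
    print(f"{name}: {before} -> {after} ({relative_change(before, after):+.0%})")
```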

But the most important number for us was retention: how often people come back to our product. In our experience this was the hardest key result to achieve. It took 3 months to see the first improvements in retention: finally, in the last test, run in December, we measured a Day-1 retention of 33% and a Day-3 retention of 15% (twice the retention measured in the first test). Of course it’s not enough, but it’s proof that what we did had a real impact on the metrics. Cool!

What didn’t work so well

Being really lean requires a lot of discipline. When you have an idea for a feature, your first instinct is to build something perfect. The first question is always “how should this feature work?”, but the right question is: “what is the minimum change we can make to the app to test whether this feature is useful?”

It’s true that our app was up and running, nicely designed, well developed, and fully working, so we didn’t want to make a mess of the product or the code. Besides, games are complex: if you really want to measure retention, you cannot show users a poor-quality product, so compromises were necessary in these cases. However, I feel that with a bit more discipline we could have been faster. We averaged 2 releases per month; I think increasing that to 3 is perfectly doable.

And… what worked

Looking at the numbers can sometimes be difficult, because numbers are never as good as you would like them to be. But knowing the metrics, seeing them grow (or drop) depending on what you do, and deciding the next steps together based on the results of previous iterations is a completely different way of working. There are no secrets and no mind tricks to motivate the team. The motivation comes from being aware of the results of what we do every day, and from deciding things all together, because we have a common goal.

Regardless of the future of our small app, the methodology was a winner. It doesn’t matter what you call it, Dual Track or something else. Actually, we adapted it to our needs, so maybe it’s not even what Dual Track Agile was supposed to be. Just like products, methodologies should be improved over several iterations. What we did may not be perfect, but we know it’s a step in the right direction.